Research | Open Access | Volume 8 (3): Article 48 | Published: 03 Jul 2025

Rotavirus surveillance system evaluation, Kilifi Hospital, Kenya

Ednah Salat1,&, Maria Nunga1, Ahmed Abade1, Emmanuel Okunga2

1Field Epidemiology and Training Program, Nairobi, Kenya, 2Division of Disease Surveillance and Response, Ministry of Health, Nairobi, Kenya

&Corresponding author: Ednah Salat, Field Epidemiology and Training Program, Nairobi, Kenya, Email: ednatonui38@gmail.com

Received: 30 May 2024, Accepted: 03 Jul 2025, Published: 03 Jul 2025

Domain: Infectious Disease Epidemiology, Surveillance System Evaluation

Keywords: Rotavirus, Surveillance system, Vaccination, Diarrhoea

©Ednah Salat et al Journal of Interventional Epidemiology and Public Health (ISSN: 2664-2824). This is an Open Access article distributed under the terms of the Creative Commons Attribution International 4.0 License (https://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Cite this article: Ednah Salat et al. Rotavirus surveillance system evaluation, Kilifi Hospital, Kenya. Journal of Interventional Epidemiology and Public Health. 2025;8:48. https://doi.org/10.37432/jieph.2025.8.3.171

Abstract

Introduction: Rotavirus is one of the causes of acute diarrhea among children under five years. Diarrhea causes 9.9% of the 6.9 million deaths worldwide among children in this age group. The rotavirus surveillance system aims to determine the disease burden and epidemiology of the virus and to monitor the impact of vaccination. The system was piloted at Kilifi County Hospital, Kenya, in 2009 and has remained active since, but it has never been evaluated at this site. We sought to evaluate its performance.

Methods: We reviewed records of children aged under five years admitted to Kilifi Hospital and collected sociodemographic and clinical information. We developed a semi-structured questionnaire and interviewed stakeholders at the national and facility levels to assess the system attributes using the updated CDC guidelines. Qualitative attributes were evaluated with a five-point Likert scale after interviews with key stakeholders, whereas quantitative attributes and other variables were described as proportions and percentages. Qualitative attribute scores were ranked as poor (<60%), average (60% to <80%), or good (≥80%).

Results: We reviewed 1,184 records of suspected cases from the database. Of these, 690 (58.3%) were male and 647 (54.7%) were younger than 12 months. Altered level of consciousness was a presenting symptom in 598 (51%) suspected cases. The system attribute scores were: usefulness (74%), flexibility (67%), stability (61%), simplicity (75%), acceptability (78%), timeliness (95%), sensitivity (11.5%), and completeness (data quality) (41%).

Conclusion: The rotavirus surveillance system (RVSS) at Kilifi County Hospital effectively monitors rotavirus trends but faces challenges in data quality, burden estimation, and financial sustainability. We recommend mentorship on data management, regular data quality audits at the facility, and measures to address funding gaps, to enhance the system's role in informing immunization policy.

Introduction

Globally, diarrhea is the second leading cause of death among children under five years of age [1]. It is estimated to account for 9.9% of deaths in this age group, with Africa and Asia accounting for around 80% of these deaths and about three-quarters of them occurring in just 15 countries [2]. In Kenya, rotavirus infection causes about 4,471 deaths each year and accounts for 19% of hospital admissions and 16% of clinic visits for diarrhea among children under five [3].

Widespread rotavirus immunization of children has substantially reduced severe diarrhea and rotavirus disease requiring hospitalization [4]. Rotavirus vaccination is considered an effective public health strategy to prevent infection and reduce disease severity. The Kenya Expanded Program on Immunization (KEPI) introduced the monovalent rotavirus vaccine Rotarix (GlaxoSmithKline) into the routine immunization program in July 2014. The vaccine is given as two oral doses at 6 and 10 weeks of age [5]. The burden of rotavirus and all-cause diarrhea declined substantially among Kenyan children after the vaccine was introduced [6].

The rotavirus surveillance system at Kilifi County Referral Hospital (KCH) was initiated in 2009, mainly to collect data that would support the introduction of rotavirus vaccination into routine immunization in Kenya [6]. The system is important for determining the disease burden and epidemiology of the virus and for monitoring the impact of vaccination. Kenya has two active rotavirus sentinel sites: Kenyatta National Hospital and Kilifi County Referral Hospital. The Rotavirus Surveillance System (RVSS) at KCH has never been evaluated. As part of routine surveillance system evaluation, this study sought to determine the performance of the RVSS at KCH and to identify the strengths and weaknesses of its implementation.

Methods

Evaluation design

We used the updated Centers for Disease Control and Prevention (CDC) guidelines for evaluating public health surveillance systems [7] and a cross-sectional study design to evaluate the RVSS. We assessed the indicators for the qualitative attributes (simplicity, stability, flexibility, and acceptability) through key informant in-depth interviews with stakeholders at the national level (Division of Disease Surveillance and Response) and semi-structured questionnaires administered to the sentinel site coordinators. The questionnaires were validated before administration: epidemiologists and public health specialists reviewed them for content relevance and comprehensibility, and a pilot test with a small subset of participants assessed clarity and consistency, after which necessary modifications were made before full-scale administration. The indicators for the quantitative attributes (sensitivity, completeness, and timeliness) were assessed by reviewing data from the database. The evaluation covered the period 2017 to 2021.

Evaluation Approach

We interviewed RVSS stakeholders at the national level and at the facility: five officers at the national level, a sentinel site coordinator, a laboratory manager, and a clinician. We first reviewed the RVSS database to obtain background information about the system. We then conducted five in-depth interviews with national-level stakeholders to understand RVSS implementation, usefulness, flexibility, stability, strengths, and weaknesses, and administered semi-structured questionnaires to the site coordinators. The indicators for the qualitative attributes were developed according to the CDC guidelines. The desk review of the data was used to describe sociodemographic and clinical information and to assess the quantitative attributes (positive predictive value [PPV], sensitivity, completeness, and timeliness).

We assessed the indicators for usefulness, flexibility, and stability using questions answered “yes” or “no”, scored 1 and 0, respectively. For acceptability and simplicity, a five-point Likert scale (1 = strongly disagree, 2 = disagree, 3 = neutral, 4 = agree, 5 = strongly agree) was used.
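To make the coding scheme concrete, the following minimal Python sketch shows how recorded answers could be converted to scores, assuming answers are stored as text labels and scoring “yes” as 1 and “no” as 0. The names `encode_response`, `YES_NO`, `LIKERT`, and `SCALES` are hypothetical; the evaluation does not describe any software.

```python
# Hypothetical coding tables: "yes" = 1 and "no" = 0 for the binary items,
# and the five-point Likert scale used for acceptability and simplicity.
YES_NO = {"yes": 1, "no": 0}
LIKERT = {
    "strongly disagree": 1,
    "disagree": 2,
    "neutral": 3,
    "agree": 4,
    "strongly agree": 5,
}
SCALES = {"yes_no": YES_NO, "likert": LIKERT}


def encode_response(answer: str, scale: str) -> int:
    """Return the numeric score for a recorded answer on the named scale."""
    mapping = SCALES[scale]  # raises KeyError for an unknown scale name
    key = answer.strip().lower()  # tolerate stray spaces and capitalisation
    if key not in mapping:
        raise ValueError(f"unrecognised answer {answer!r} for scale {scale!r}")
    return mapping[key]


print(encode_response("Yes", "yes_no"))              # 1
print(encode_response(" Strongly agree", "likert"))  # 5
```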

For each indicator, the score percentage was calculated as:

$$\text{Indicator Score (\%)} = \left( \frac{\sum \text{of all respondents’ scores for each indicator}}{\text{Maximum score for indicator} \times \text{Number of respondents}} \right) \times 100$$

The overall attribute score percentage was calculated as:

$$\text{Attribute Score (\%)} = \left( \frac{\sum \text{of all respondents’ scores for all indicators}}{\text{Number of indicators} \times \text{Maximum score per indicator} \times \text{Number of respondents}} \right) \times 100$$
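The two formulas above can be expressed as a short Python sketch. The function names and the three-respondent input are hypothetical illustrations, not data from this evaluation, and the code assumes every indicator is scored by the same respondents on the same maximum scale.

```python
from typing import Sequence


def indicator_score_pct(scores: Sequence[int], max_score: int) -> float:
    """Indicator score (%): sum of respondents' scores divided by
    (maximum score for the indicator x number of respondents), times 100."""
    if not scores:
        raise ValueError("at least one respondent score is required")
    return sum(scores) / (max_score * len(scores)) * 100


def attribute_score_pct(indicators: Sequence[Sequence[int]], max_score: int) -> float:
    """Attribute score (%): sum of all respondents' scores for all indicators divided by
    (number of indicators x maximum score x number of respondents), times 100.

    Assumes every indicator was scored by the same set of respondents.
    """
    if not indicators:
        raise ValueError("at least one indicator is required")
    n_respondents = len(indicators[0])
    if n_respondents == 0 or any(len(s) != n_respondents for s in indicators):
        raise ValueError("each indicator needs one score per respondent")
    total = sum(sum(s) for s in indicators)
    return total / (len(indicators) * max_score * n_respondents) * 100


# Hypothetical Likert scores (maximum 5) from three respondents on two indicators.
example = [[5, 4, 4], [3, 2, 4]]
print([round(indicator_score_pct(s, 5), 1) for s in example])  # [86.7, 60.0]
print(round(attribute_score_pct(example, 5), 1))                # 73.3
```

When all indicators share the same respondents and maximum score, the attribute score equals the mean of the indicator scores, as in the example ((86.7 + 60.0) / 2 ≈ 73.3).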

We retrospectively reviewed rotavirus data for the five-year period from January 2017 to December 2021. The following variables were abstracted: patient identification number, age, sex, date of admission, clinical symptoms (diarrhea, dehydration, vomiting, and fever), rotavirus vaccination status, number of vaccine doses, disease outcome, date of sample collection, rotavirus test result, specimen adequacy, date the specimen was sent to the laboratory, date samples from ELISA-positive cases were collected for genotyping, and genotyping results.
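One way to hold the abstracted variables is a record type in which a missing database entry is stored as `None`, so that missing fields can be listed directly when assessing completeness. This is a hypothetical Python sketch; the field names and the example patient ID are illustrative and do not reflect the actual RVSS database schema.

```python
from dataclasses import dataclass, fields
from datetime import date
from typing import List, Optional


@dataclass
class RotavirusRecord:
    """Hypothetical schema for one abstracted record; None marks a missing value."""
    patient_id: str
    age_months: Optional[float] = None
    sex: Optional[str] = None
    admission_date: Optional[date] = None
    diarrhea: Optional[bool] = None
    dehydration: Optional[bool] = None
    vomiting: Optional[bool] = None
    fever: Optional[bool] = None
    rv_vaccinated: Optional[bool] = None
    vaccine_doses: Optional[int] = None
    outcome: Optional[str] = None
    sample_collection_date: Optional[date] = None
    rotavirus_result: Optional[str] = None
    specimen_adequate: Optional[bool] = None
    specimen_sent_date: Optional[date] = None
    genotyping_sample_date: Optional[date] = None
    genotype: Optional[str] = None

    def missing_fields(self) -> List[str]:
        """Names of the fields that were not captured (still None)."""
        return [f.name for f in fields(self) if getattr(self, f.name) is None]


# Hypothetical record with only three variables captured.
rec = RotavirusRecord(patient_id="KCH-0001", age_months=9.0, sex="M")
print(len(rec.missing_fields()))  # 14
```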

The positive predictive value (PPV) was calculated as the proportion of stool samples that tested positive for rotavirus out of all stool samples tested (i.e., the number of confirmed positive cases divided by the total number of samples tested). Sensitivity was assessed as the proportion of suspected rotavirus gastroenteritis cases whose stool samples tested positive (i.e., the number of confirmed positive cases divided by the total number of suspected cases who submitted stool samples). PPV is intended to reflect the likelihood that a positive result truly indicates infection, while sensitivity measures the system’s ability to detect cases among those suspected. Timeliness was defined as the interval from specimen collection to receipt of the specimen in the laboratory. Completeness (data quality) was measured as the percentage of missing variables. Qualitative attribute scores were graded as poor (<60%), average (60% to <80%), or good (≥80%).
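The grading thresholds can be written as a small function. This Python sketch (hypothetical name `rank_attribute`) assumes scores are percentages between 0 and 100; timeliness, which the Results assess against a separate 90% target, is not graded by it.

```python
def rank_attribute(score_pct: float) -> str:
    """Grade an attribute score (%): poor (<60), average (60 to <80), good (>=80)."""
    if not 0 <= score_pct <= 100:
        raise ValueError("score must be a percentage between 0 and 100")
    if score_pct >= 80:
        return "Good"
    if score_pct >= 60:
        return "Average"
    return "Poor"


for pct in (92, 80, 74, 60, 40):
    print(pct, rank_attribute(pct))
# 92 Good
# 80 Good
# 74 Average
# 60 Average
# 40 Poor
```

The boundary cases match the tables, where indicators scoring exactly 80% are ranked good and those scoring exactly 60% are ranked average.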

Ethical Approval

Because this was a non-research public health exercise within the mandate of the Ministry of Health, formal ethical approval was not required. Permission to obtain and use the data was granted by the Ministry of Health through the head of the Division of Disease Surveillance and Response. The objectives of the study were explained to the stakeholders and site coordinators before participation, and interviews or questionnaires were administered only to those who agreed to take part. The data obtained were kept secure and used only for the surveillance system evaluation.

Results

Description of Rotavirus Surveillance System

The Ministry of Health established the RVSS in 2009 with support from WHO. After rotavirus vaccine was introduced into the immunization program in 2014, the RVSS objectives were updated to include assessing the impact of the vaccine on rotavirus (RV) morbidity and mortality among children aged under 5 years, as well as monitoring RV epidemiology and circulating strains. The system also provides a basis for other epidemiological research.

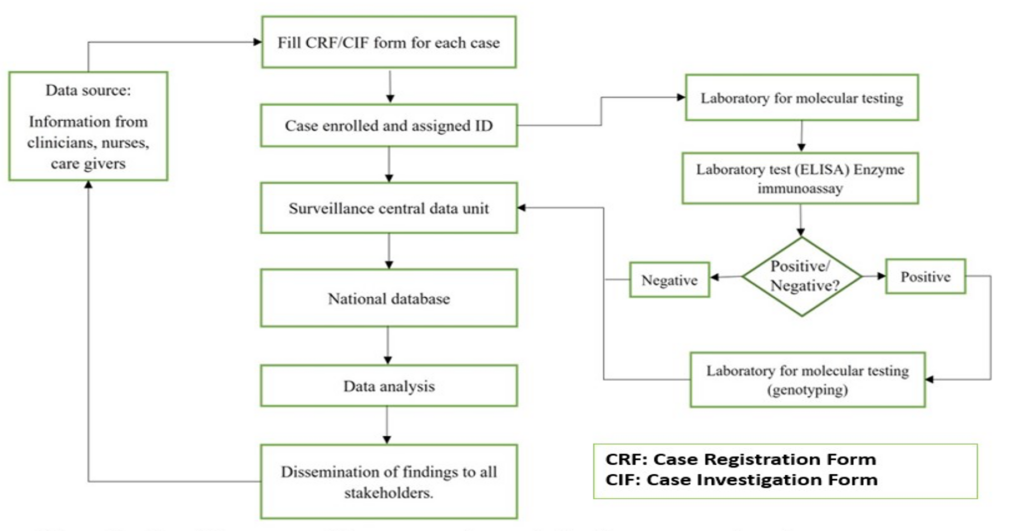

The RVSS gathered information on each episode of diarrhea among children under five years. Data sources included the Department of Pediatrics, the Emergency Room, and the Diarrhea Treatment Centers. A suspected case was a child aged <5 years presenting with acute watery diarrhea (duration <14 days, defined as three or more loose or watery stools within 24 hours) and admitted for diarrhea treatment to the ward or emergency unit. Children presenting with bloody diarrhea or nosocomial infections were excluded. A confirmed case was a suspected case in whose stool RV was detected by an enzyme immunoassay (EIA) or a polymerase chain reaction (PCR)-based method. The flow of the surveillance system is shown in Figure 1.
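The case definitions above translate into simple boolean checks. The Python sketch below is illustrative only: the `Presentation` fields are hypothetical, age is assumed to be recorded in months (so <5 years means <60 months), and "acute" is taken as a duration of less than 14 days.

```python
from dataclasses import dataclass


@dataclass
class Presentation:
    """Hypothetical clinical fields needed to apply the RVSS case definition."""
    age_months: float
    loose_stools_24h: int  # loose or watery stools in the past 24 hours
    diarrhea_days: int     # duration of the current diarrhea episode
    admitted: bool         # admitted to the ward or emergency unit for diarrhea treatment
    bloody_stool: bool
    nosocomial: bool       # infection acquired in hospital


def is_suspected_case(p: Presentation) -> bool:
    """Child <5 years, acute watery diarrhea, admitted, and not excluded."""
    acute_watery = p.loose_stools_24h >= 3 and p.diarrhea_days < 14
    excluded = p.bloody_stool or p.nosocomial
    return p.age_months < 60 and acute_watery and p.admitted and not excluded


def is_confirmed_case(p: Presentation, eia_positive: bool, pcr_positive: bool) -> bool:
    """A suspected case with rotavirus detected by EIA or a PCR-based method."""
    return is_suspected_case(p) and (eia_positive or pcr_positive)


child = Presentation(age_months=11, loose_stools_24h=4, diarrhea_days=2,
                     admitted=True, bloody_stool=False, nosocomial=False)
print(is_suspected_case(child))                                         # True
print(is_confirmed_case(child, eia_positive=True, pcr_positive=False))  # True
```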

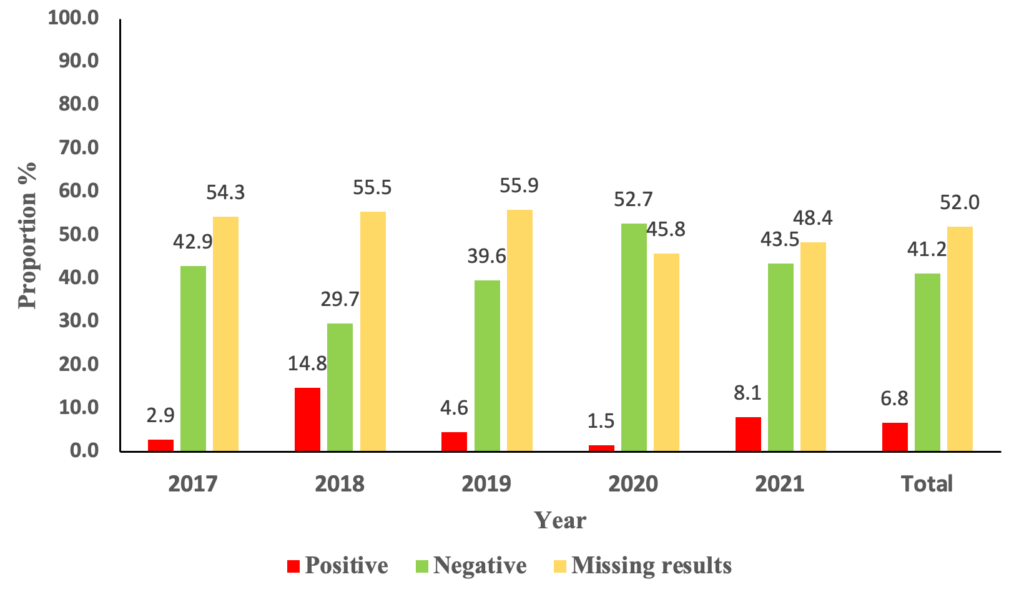

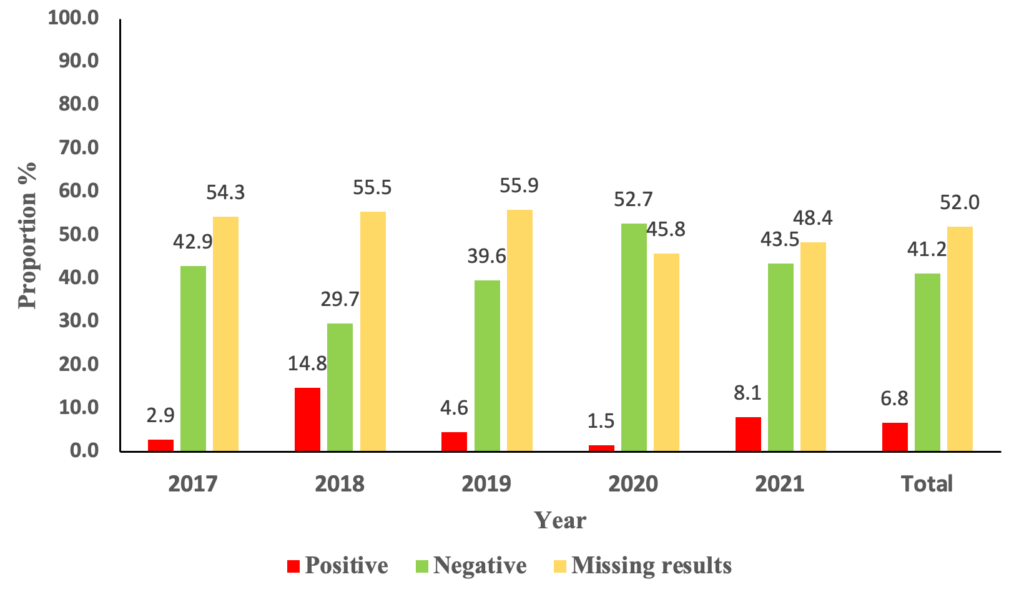

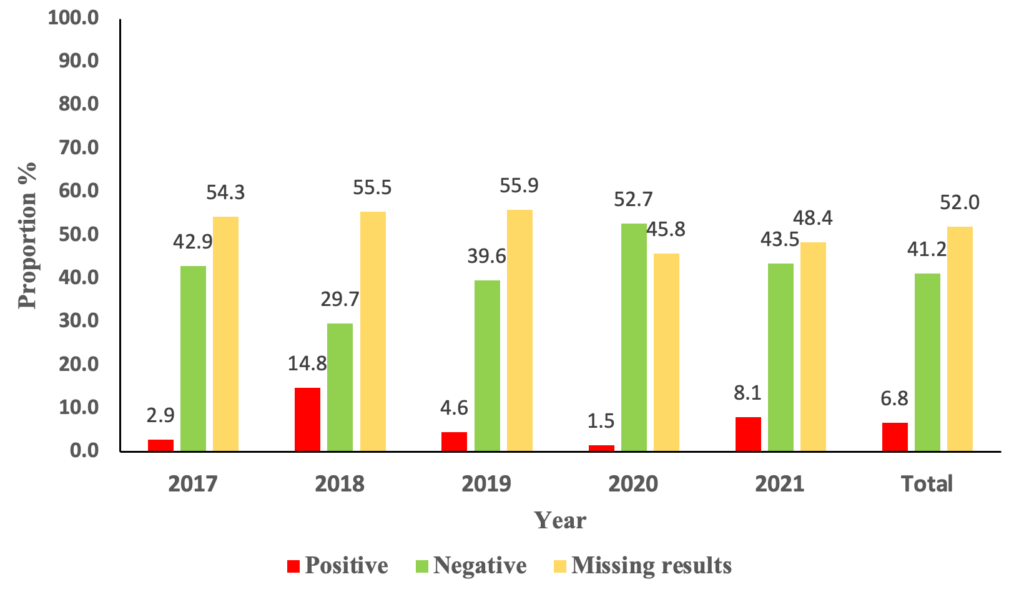

A total of 1,184 suspected cases were identified. The yearly proportion of suspected cases that were rotavirus-positive was 1/35 (2.9%) in 2017, 31/209 (14.8%) in 2018, 19/417 (4.6%) in 2019, 3/201 (1.5%) in 2020, and 26/322 (8.1%) in 2021 (Figure 2). The median age of suspected cases was 10.9 months (interquartile range 7 to 16.6 months). The majority of suspected cases were male (690/1,184, 58.3%).
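The yearly figures can be checked from the reported counts. The denominators sum to the 1,184 suspected cases and the numerators to the 80 positive samples, so each yearly percentage is the share of all suspected cases in that year that tested positive, not the positivity among tested samples. A minimal Python check (the dictionary and function names are illustrative):

```python
# Reported counts per year: (rotavirus-positive cases, suspected cases).
YEARLY = {
    2017: (1, 35),
    2018: (31, 209),
    2019: (19, 417),
    2020: (3, 201),
    2021: (26, 322),
}


def percent(numerator: int, denominator: int) -> float:
    """Percentage rounded to one decimal place, as reported in the text."""
    return round(100 * numerator / denominator, 1)


positivity = {year: percent(pos, n) for year, (pos, n) in YEARLY.items()}
total_positive = sum(pos for pos, _ in YEARLY.values())
total_suspected = sum(n for _, n in YEARLY.values())

print(positivity)  # {2017: 2.9, 2018: 14.8, 2019: 4.6, 2020: 1.5, 2021: 8.1}
print(total_positive, total_suspected)  # 80 1184
print(percent(690, 1184))  # 58.3 (male suspected cases)
```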

In-depth Interviews with stakeholders at the National level

Usefulness

Five of the eight usefulness indicators (62.5%) achieved a good rank, whereas two indicators, estimation of rotavirus magnitude and mortality, and use of the data in developing the national policy strategy for the national immunization program, were ranked poor. Use of the data as a basis for epidemiological research was ranked average. Overall usefulness was ranked average (148/200, 74%) (Table 1).

Semi-structured questionnaire for the facility program coordinators

For flexibility, one indicator, “Does the system accommodate the change in case definition”, had a good rank (23/25, 92%), while two indicators, accommodating changes in reporting (18/25, 72%) and accommodating integration with other surveillance systems (16/25, 64%), had an average rank. Accommodating changes in funding was ranked poor (10/25, 40%). Overall flexibility was ranked average (67/100, 67%) (Table 2).

All stability indicators achieved an average rank: system outages during the review period (15/25, 60%), stability of the system after withdrawal of partner support (15/25, 60%), time required for data management (16/25, 64%), and release of reports and feedback between both levels (15/25, 60%). Overall stability was ranked average (61/100, 61%) (Table 3).
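The overall scores for usefulness (Table 1), flexibility (Table 2), and stability (Table 3) follow from the indicator totals. The Python sketch below assumes a maximum of 25 points per indicator, as implied by the N=25 in the table captions, and rounds to whole percentages as the tables do; the names are illustrative.

```python
# Indicator totals as reported in Tables 1-3.
MAX_PER_INDICATOR = 25  # assumed from "N=25" in the table captions
TABLES = {
    "usefulness": [13, 22, 21, 20, 12, 23, 20, 17],
    "flexibility": [23, 10, 18, 16],
    "stability": [15, 15, 16, 15],
}


def overall(totals, max_per_indicator=MAX_PER_INDICATOR):
    """Return (points obtained, points possible, whole-number percentage)."""
    obtained = sum(totals)
    possible = max_per_indicator * len(totals)
    return obtained, possible, round(100 * obtained / possible)


for name, totals in TABLES.items():
    print(name, overall(totals))
# usefulness (148, 200, 74)
# flexibility (67, 100, 67)
# stability (61, 100, 61)
```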

For simplicity, the availability and use of the case definition and the availability of reporting tools were ranked good (15/15, 100% and 12/15, 80%, respectively). The two training indicators, training (11/15, 73%) and frequency of training (9/15, 60%), were ranked average. However, the time required for data collection and transmission was ranked poor (6/15, 40%). Overall simplicity was ranked average (75/100, 75%).

Three of the four acceptability indicators achieved a good rank. Participants felt that the system was meeting its intended purpose and were willing to participate (13/15, 87%). However, they felt that they were not receiving adequate feedback from the national level (10/15, 67%). The overall rank for acceptability was good (47/60, 78%).

Sensitivity

From the database records, 1,184 suspected cases were identified. Sensitivity was regarded as the system’s ability to detect cases among suspected cases meeting the case definition. Stool samples were collected from 696 suspected cases, of which only 80 (11.5%) tested positive for rotavirus, indicating poor sensitivity.
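As defined in the Methods, the PPV and the sensitivity measure share the same numerator (confirmed positives) and denominator (suspected cases with a stool sample tested), so with the reported counts both reduce to 80/696. The Python sketch below computes that ratio and, for context, the share of suspected cases that were tested; the latter (58.8%) is derived here from the reported counts and is not stated in the original text.

```python
def percent(numerator: int, denominator: int) -> float:
    """Percentage, guarding against an empty denominator."""
    if denominator <= 0:
        raise ValueError("denominator must be positive")
    return 100 * numerator / denominator


SUSPECTED = 1184  # suspected cases identified in the database
TESTED = 696      # suspected cases with a stool sample collected and tested
POSITIVE = 80     # samples that tested positive for rotavirus

pct_tested = percent(TESTED, SUSPECTED)
pct_positive = percent(POSITIVE, TESTED)  # the 11.5% reported for sensitivity
print(f"suspected cases tested: {pct_tested:.1f}%")   # suspected cases tested: 58.8%
print(f"positive among tested: {pct_positive:.1f}%")  # positive among tested: 11.5%
```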

Timeliness

Timeliness was defined as the interval between specimen collection and receipt of the specimen in the laboratory, and was considered good when at least 90% of specimens were received in the laboratory within three days of collection. Timeliness of sample arrival in the laboratory was very good (95.2%). The timeliness of returning results from the laboratory to the ward was not documented in the database.
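A minimal sketch of the timeliness calculation, assuming that "within three days" means a collection-to-receipt interval of 0 to 3 calendar days and that specimens without a receipt date are excluded from the denominator. The function name and the four specimens are hypothetical; the reported 95.2% cannot be reproduced without the underlying records.

```python
from datetime import date
from typing import Iterable, Optional, Tuple


def timeliness_pct(pairs: Iterable[Tuple[date, Optional[date]]], max_days: int = 3) -> float:
    """Percentage of specimens received in the laboratory within max_days of collection.

    Pairs without a receipt date are excluded (an assumption; they could be
    counted as late instead). Negative intervals count as not on time.
    """
    delays = [(received - collected).days
              for collected, received in pairs if received is not None]
    if not delays:
        raise ValueError("no specimens with both dates recorded")
    on_time = sum(1 for d in delays if 0 <= d <= max_days)
    return 100 * on_time / len(delays)


# Hypothetical specimens: (collection date, laboratory receipt date).
specimens = [
    (date(2019, 3, 1), date(2019, 3, 2)),   # 1 day
    (date(2019, 3, 4), date(2019, 3, 7)),   # 3 days
    (date(2019, 3, 5), date(2019, 3, 11)),  # 6 days
    (date(2019, 3, 9), None),               # receipt date missing
]
pct = timeliness_pct(specimens)
print(f"{pct:.1f}% on time ({'meets' if pct >= 90 else 'below'} the 90% target)")
# 66.7% on time (below the 90% target)
```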

Data quality (completeness)

Data quality was generally poor, with many variables missing from the database. Of the 104 variables in the database, only 45 (43.3%) had entries. The date the specimen was received in the laboratory was missing for 472/1,184 (39.9%) records, and Enzyme-Linked Immunosorbent Assay (ELISA) test results were missing for 488/1,184 (41.2%). The overall rank for data quality was poor (488/1,184, 41.2%).
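The completeness figures are simple proportions of missing entries. The Python sketch below reproduces the reported percentages from their counts and shows one hypothetical way to compute per-variable missingness from records, treating absent keys, `None`, and empty strings as missing (an assumption about how blanks are stored). The variable names in the example records are illustrative.

```python
from typing import Dict, List, Mapping, Optional


def pct_missing(missing: int, total: int) -> float:
    """Percentage missing, rounded to one decimal place."""
    return round(100 * missing / total, 1)


def missing_by_variable(records: List[Mapping[str, Optional[object]]],
                        variables: List[str]) -> Dict[str, float]:
    """Percentage of records in which each variable is absent, None, or an empty string."""
    n = len(records)
    return {
        var: pct_missing(sum(1 for r in records if r.get(var) in (None, "")), n)
        for var in variables
    }


# Reported counts.
print(pct_missing(472, 1184))   # 39.9  date received in the laboratory
print(pct_missing(488, 1184))   # 41.2  ELISA result
print(round(100 * 45 / 104, 1))  # 43.3  variables with entries

# Hypothetical records illustrating the per-variable calculation.
records = [
    {"elisa_result": "positive", "lab_received": None},
    {"elisa_result": None, "lab_received": "2019-03-02"},
    {"elisa_result": "negative", "lab_received": ""},
    {"elisa_result": "negative"},
]
print(missing_by_variable(records, ["elisa_result", "lab_received"]))
# {'elisa_result': 25.0, 'lab_received': 75.0}
```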

Discussion

The rotavirus surveillance system at KCH met its objective of monitoring the epidemiological pattern and trends of rotavirus, and it identified rotavirus cases in every year of the study period, suggesting that it performs well in several critical areas. However, two key usefulness indicators were ranked poor: estimation of rotavirus magnitude, owing to poor-quality data, and the development of the national policy strategy for the national immunization program. This indicates gaps in the system’s ability to provide accurate epidemiological data and inform policy decisions. In addition, use of the data as a basis for epidemiological research was ranked only average, reflecting potential issues with the quality or comprehensiveness of the data collected. Overall, the system’s usefulness was ranked average, leaving room for improvement, particularly in areas critical for public health planning and response.

Of the eight attributes assessed, usefulness, flexibility, stability, and simplicity were rated average; acceptability and timeliness were rated good; and sensitivity and data quality were rated poor. The sensitivity of the rotavirus surveillance system in Kilifi was low (11.5%), consistent with the rotavirus surveillance system evaluation in Yemen, which was also assessed as poor, with a score of 16% [8]. This indicates that the system’s ability to identify true cases of rotavirus infection was substantially limited. Although usefulness, flexibility, and stability were rated average, the low sensitivity is a critical area needing improvement if the system is to monitor rotavirus morbidity and mortality effectively. The true picture of the rotavirus burden during this period could have been different if all the data had been documented.

Although the surveillance system was simple for staff to use, data quality was poor: crucial variables were missing or not captured in the database. Missing information could impede specific interventions, decision-making, and program planning. This shortfall may reflect inadequate supportive supervision to monitor data processes in the hospital and to give staff feedback on the system’s performance. This is consistent with a study in Ethiopia which found that inadequate supervision undermined data monitoring in the health care system [9].

The COVID-19 pandemic may have affected the stability of the system, even though the system continued functioning during the period. This is consistent with a study of how the COVID-19 pandemic affected essential health services in Kenya, which found that routine services were disrupted during that time as attention shifted to COVID-19 and human and financial resources were redirected toward response, prevention, and control activities [10].

The surveillance system’s flexibility was rated average. The system was notably good at accommodating changes in case definitions, suggesting that it can adapt to evolving epidemiological knowledge. Its ability to accommodate changes in funding, however, was ranked poor, while its ability to be maintained after withdrawal of partner support was rated average. These observations contrast with the evaluation of the surveillance system in Yemen [8], which identified donor dependency as a weakness. Poor flexibility in financial adjustments could hinder the system’s responsiveness to changing resource needs, highlighting an important area for improvement.

Acceptability was one of the stronger attributes, with three of the four indicators ranked good. Participants’ willingness to participate and their general sense that the system served its intended purpose demonstrated strong user support and engagement. Nonetheless, there was notable dissatisfaction with national-level feedback, pointing to a communication gap that might affect user motivation and the surveillance system’s effectiveness. These findings echo a qualitative assessment of data quality in health centres in Ethiopia, which found that weak or absent supervision of data reporting resulted in low morale and heavy workloads, leading to incomplete or delayed data entry and little systematic verification of data before reporting [9]. Acceptability was rated good overall; improving feedback mechanisms could strengthen it further.

The stability indicators collectively received an average rank, indicating that the system is moderately stable. Stability is essential for the consistent operation of a surveillance system, and the average score suggests there may be fluctuations or issues to address to ensure ongoing, reliable performance. These findings are similar to those of a surveillance system evaluation for neglected tropical diseases in Kenya, which found moderate reliability with areas to be strengthened [11].

Simplicity received a mixed assessment. The availability and use of case definitions and reporting tools were rated highly, showing that the system is straightforward in these respects. However, training and its frequency were rated only average, and the time required for data collection and transmission was ranked poor. Thus, although the system may be easy to understand, practical issues in training and data handling reduce its overall simplicity. Similar findings were reported in a study in which health care workers described forms as cumbersome, training as insufficient, and data collection as too time-consuming [11]. Simplifying training procedures and data management could improve system performance.

Limitations

Our evaluation had limitations. Respondents for the qualitative attributes were selected purposively, which may have introduced selection and information biases. Some quantitative attributes could not be evaluated because of missing data in the database. Finally, the evaluation was conducted within a limited time at KCH, one of the two RVSS sentinel sites, and may therefore not reflect the performance of the system in other regions of Kenya.

Conclusion

The Rotavirus Surveillance System (RVSS) at Kilifi County Hospital demonstrates value in tracking epidemiological patterns, fulfilling one of its core objectives. However, critical challenges persist, particularly in data quality, completeness, and the system’s ability to accurately estimate disease burden and mortality. These limitations undermine its potential to support national immunization policy development. Operational simplicity is evident in the availability of clear case definitions and tools, yet gaps in training and data management processes reduce overall efficiency. Additionally, over-reliance on partner funding compromises financial flexibility and long-term sustainability. Targeted improvements in data quality, staff capacity, and funding structures are essential to enhance the system’s reliability, responsiveness, and utility in guiding rotavirus prevention efforts.

Recommendations

The overall score for the surveillance system was average, and improving the poorly performing attributes would strengthen its performance. The system’s low sensitivity may limit case detection and the estimation and monitoring of the rotavirus burden in the population. For sustainability, the gradual replacement of donor funding with government funding should be planned. Data collection and recording should be strengthened through supportive supervision and training. We recommend regular data quality audits at the facility and mentorship on data entry.

What is already known about the topic

- Rotavirus remains a major cause of severe diarrhea in children under five globally.

- Surveillance systems help track disease burden and assess the impact of vaccination programs.

- WHO and CDC recommend evaluating surveillance systems to ensure quality and effectiveness.

- Prior studies show strengths in usefulness and acceptability, but gaps exist in data quality, timeliness, and sustainability.

- In Kenya, few rotavirus surveillance evaluations have been done at the county level.

What this study adds

- Provides the first evaluation of the rotavirus surveillance system at Kilifi County Referral Hospital.

- Confirms the system is useful and acceptable for detecting rotavirus trends and informing the response.

- Identifies key weaknesses: delays in reporting, incomplete data, and dependence on donor-funded laboratory support.

- Recommends strengthening feedback, enhancing local data use, and planning for long-term system sustainability.

Acknowledgements

We sincerely thank the Ministry of Health, Kenya, for its support and for providing the permissions and data needed for this study. We also appreciate the guidance of the Division of Disease Surveillance and Response and the Kenya Field Epidemiology and Laboratory Training Programme (KFELTP). We are grateful to the management and staff of Kilifi County Hospital for their contribution to the success of this study.


References

- Walker CLF, Rudan I, Liu L, Nair H, Theodoratou E, Bhutta ZA, et al. Global burden of childhood pneumonia and diarrhoea. Lancet [Internet]. 2013 Apr 20;381(9875):1405-16. Available from: https://linkinghub.elsevier.com/retrieve/pii/S0140673613602226 doi: 10.1016/S0140-6736(13)60222-6

- Tate JE, Burton AH, Boschi-Pinto C, Steele AD, Duque J, Parashar UD. 2008 estimate of worldwide rotavirus-associated mortality in children younger than 5 years before the introduction of universal rotavirus vaccination programmes: a systematic review and meta-analysis. Lancet Infect Dis [Internet]. 2012 Feb;12(2):136-41. Available from: https://linkinghub.elsevier.com/retrieve/pii/S1473309911702535 doi: 10.1016/S1473-3099(11)70253-5

- Tate JE, Rheingans RD, O’Reilly CE, Obonyo B, Burton DC, Tornheim JA, et al. Rotavirus disease burden and impact and cost-effectiveness of a rotavirus vaccination program in Kenya. J Infect Dis [Internet]. 2009 Nov 1;200(Suppl 1):S76-84. Available from: https://academic.oup.com/jid/article-lookup/doi/10.1086/605058 doi: 10.1086/605058

- Patel MM, Glass R, Desai R, Tate JE, Parashar UD. Fulfilling the promise of rotavirus vaccines: how far have we come since licensure? Lancet Infect Dis [Internet]. 2012 Jul;12(7):561-70. Available from: https://linkinghub.elsevier.com/retrieve/pii/S1473309912700294 doi: 10.1016/S1473-3099(12)70029-4

- Ministry of Health (Kenya). National policy guidelines on immunization 2013 [Internet]. Nairobi: Ministry of Health (Kenya); 2014 Jan [cited 2025 Jul 3]. Available from: https://www.medbox.org/document/kenya-national-policy-guidelines-on-immunization-2013

- Otieno GP, Bottomley C, Khagayi S, Adetifa I, Ngama M, Omore R, et al. Impact of the introduction of rotavirus vaccine on hospital admissions for diarrhea among children in Kenya: a controlled interrupted time-series analysis. Clin Infect Dis [Internet]. 2020 Jun 10;70(11):2306-13. Available from: https://academic.oup.com/cid/article/70/11/2306/5572563 doi: 10.1093/cid/ciz912

- German RR, Lee LM, Horan JM, Milstein RL, Pertowski CA, Waller MN, et al. Updated guidelines for evaluating public health surveillance systems: recommendations from the Guidelines Working Group. MMWR Recomm Rep [Internet]. 2001 Jul 27;50(RR-13):1-35. Available from: https://www.cdc.gov/mmwr/preview/mmwrhtml/rr5013a1.htm

- Lardi EA, Al Kuhlani SS, Al Amad MA, Al Serouri AA, Khader YS. The rotavirus surveillance system in Yemen: evaluation study. JMIR Public Health Surveill [Internet]. 2021 Jun 8;7(6):e27625. Available from: https://publichealth.jmir.org/2021/6/e27625 doi: 10.2196/27625

- Chekol A, Ketemaw A, Endale A, Aschale A, Endalew B, Asemahagn MA. Data quality and associated factors of routine health information system among health centers of West Gojjam Zone, northwest Ethiopia, 2021. Front Health Serv [Internet]. 2023 Mar 24;3:1059611. Available from: https://www.frontiersin.org/articles/10.3389/frhs.2023.1059611/full doi: 10.3389/frhs.2023.1059611

- Kiarie H, Temmerman M, Nyamai M, Liku N, Thuo W, Oramisi V, et al. The COVID-19 pandemic and disruptions to essential health services in Kenya: a retrospective time-series analysis. Lancet Glob Health [Internet]. 2022 Sep;10(9):e1257-67. Available from: https://linkinghub.elsevier.com/retrieve/pii/S2214109X22002856 doi: 10.1016/S2214-109X(22)00285-6

- Ng’etich AKS, Voyi K, Mutero CM. Evaluation of health surveillance system attributes: the case of neglected tropical diseases in Kenya. BMC Public Health [Internet]. 2021 Feb 23;21:396. Available from: https://bmcpublichealth.biomedcentral.com/articles/10.1186/s12889-021-10443-2 doi: 10.1186/s12889-021-10443-2


Tables

| Indicator | Score, n (%) | Rank |
|---|---|---|
| 1. Does the system data provide an estimate of rotavirus incidence, magnitude, and mortality? | 13 (52) | Poor |
| 2. Does the system data detect trends of rotavirus spread over time? | 22 (88) | Good |
| 3. Are the high-risk groups recognized by the system? | 21 (84) | Good |
| 4. Does the system use its data to plan for resources targeting prevention and control? | 20 (80) | Good |
| 5. Is the system data used in developing and updating the national policy strategy in the national immunization program? | 12 (48) | Poor |
| 6. Are the effects of interventions monitored by the system data? | 23 (92) | Good |
| 7. Are they able to use the data collected to estimate the needed laboratory kits? | 20 (80) | Good |
| 8. Is the data generated from the system used for basic epidemiological research? | 17 (68) | Average |
| Overall usefulness (total = 200) | 148 (74) | Average |

Table 1: The overall usefulness of the rotavirus surveillance system at Kilifi County Referral Hospital, 2017–2021, N=25

| Indicator | Score, n (%) | Rank |
|---|---|---|
| 1. Does the system accommodate the change in case definition? | 23 (92) | Good |
| 2. Does the system accommodate changes in funding? | 10 (40) | Poor |
| 3. Does the system accommodate any changes in reporting? | 18 (72) | Average |
| 4. Does the system accommodate integration with other surveillance systems? | 16 (64) | Average |
| Overall flexibility (total = 100) | 67 (67) | Average |

Table 2: Flexibility of the rotavirus surveillance system at Kilifi County Referral Hospital, 2017–2021, N=25

| Indicator | Score, n (%) | Rank |
|---|---|---|
| 1. System outages were experienced during the period under review? | 15 (60) | Average |
| 2. The stability of the system will be maintained after the withdrawal of the partner support? | 15 (60) | Average |
| 3. A little time is required for data management | 16 (64) | Average |
| 4. Reports are released regularly to and from both levels? What about feedback? | 15 (60) | Average |
| Overall stability (total = 100) | 61 (61) | Average |

Table 3: Stability of the rotavirus surveillance system in Kilifi County Referral Hospital, Kenya, 2017–2021, N=25
